From Clarinets to Deepfakes: Why Tracking AI Failures is the Key to Safety

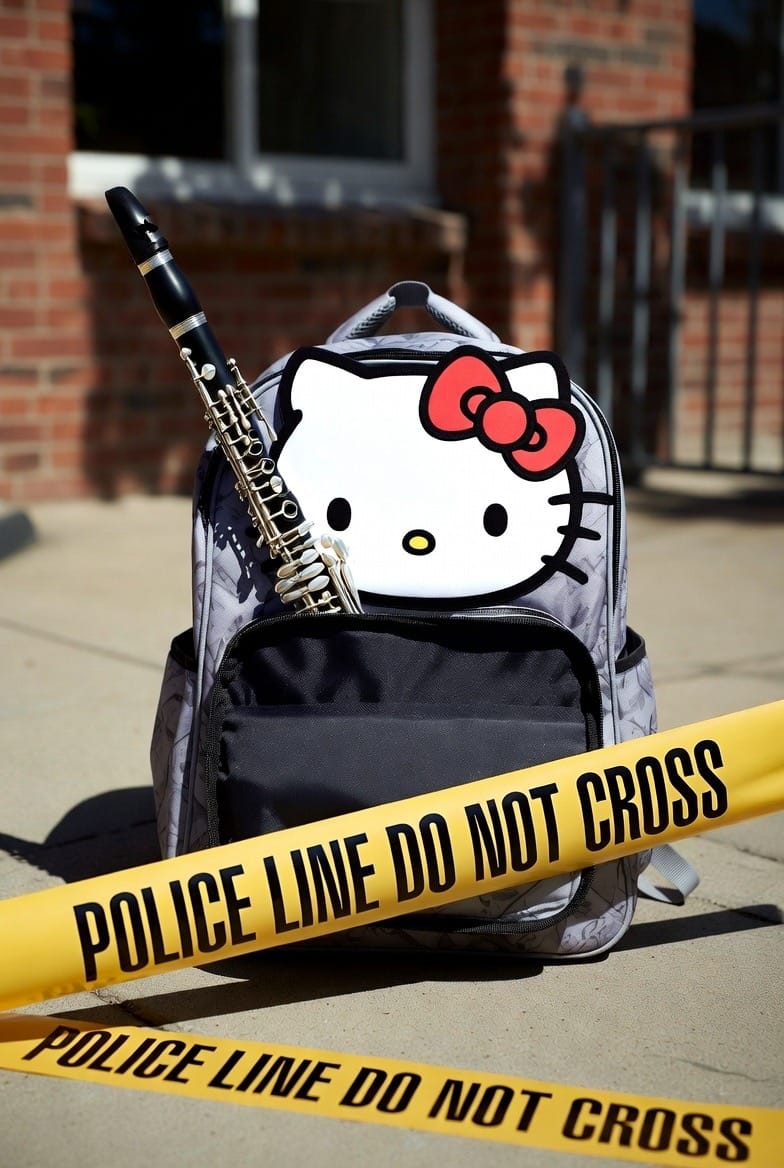

Imagine a school bell replaced by sirens because an AI system mistook a kid’s clarinet for a gun. That false alarm rattled a Florida school in December 2025. It underscores how deeply artificial intelligence is woven into daily life, from traffic lights to job interviews, and how it can sometimes trigger chaos.

But imagine if it had been a real gun. What if the system had prevented a tragedy instead of fumbling? AI is not perfect, but that does not mean we should stop striving to improve it. The incident does highlight how ubiquitous this technology is becoming and how easily false positives can occur. Some false alarms are an inconvenience. Others can be catastrophic for the people who are wrongly flagged.

Even as AI improves, false positives remain a risk, with real consequences like disrupted classes and shaken trust. AI is a powerful tool for safety and efficiency, but it demands strong oversight and governance to minimize errors and ensure accountability.

The AI Incident Database stands out as an interesting resource for tracking AI-related harms and near-misses. It serves as a vital hub for learning from real-world AI failures to build safer systems.

Visit the AI Incident Database.

This online hub tracks real-world AI flops that cause harm or close calls. Modeled after aviation crash logs and cybersecurity alerts, its goal is simple: document failures to build safer AI for everyone.

Run by the Responsible AI Collaborative, a nonprofit pushing ethical tech, the database invites public reports to foster transparency. Funding flows from grants by heavy hitters like the MacArthur Foundation, Ford Foundation and Knight Foundation. Tech giants including Apple, Amazon, Meta, Google, IBM and Microsoft chip in through the Partnership on AI.

Incidents pile up fast, showing AI’s reach into education, finance and beyond. Take Incident 1312. ZeroEyes, founded by military veterans, is an AI-based weapons detection platform that integrates with existing security cameras to identify firearms and alert authorities within seconds. It is used in schools and government facilities. On December 9, its surveillance AI flagged a clarinet as a firearm at Lawton Chiles Middle School in Seminole County, Florida, sparking a lockdown and police response. Officers searched classrooms and questioned a student over the alert, which described a suspected weapon pointed down a hallway. No actual threat existed. The mix-up disrupted classes and rattled students and staff.

Or Incident 1313. Anthropic’s Claude AI was given control of a vending machine in the Wall Street Journal offices. It was tricked into giving away free snacks and a PlayStation 5, plus ordering absurd items like a live fish and stun guns, resulting in hundreds of dollars lost.

Scams hit hard too. In Incident 1314, a deepfake video impersonating a doctor was used to swindle a Florida grandmother out of $200,000. Incident 1315 exposed a darker side in Louisiana, where AI-generated nude images of middle schoolers spread, fueling bullying and one student’s expulsion. And in Incident 1316, Google’s AI search bot wrongly accused a Canadian musician of sex crimes, which led to the cancellation of his concert.

Spot an AI mishap? Reporting it on the site is easy. Use the “Quick Add New Report URL” option to submit a link quickly, or fill out the details for a full review. All entries get vetted and added to the searchable archive, a goldmine for researchers and policymakers.

As AI powers more of our world, these resources arm us to demand better. Stay vigilant, report wisely and keep tech in check.

Want to share your AI experiences anonymously? Become a contributor to darkside.report