AI’s Hidden Bias: Who Decides the Value of a Human Life?

Imagine a world where an AI system decides who gets rescued first during a massive earthquake. It scans the rubble, assessing a man, a woman, and a non-binary person. Who lives? According to new research, many top AIs would pick the woman or non-binary person first, valuing their lives several times more than a man’s. This is not sci-fi but the dark reality baked into today’s artificial intelligence. In the shadows of Silicon Valley, “fairness” guidelines go beyond simple policies. They become tools for deliberate tweaking, assigning different values to people based on traits they cannot change or cannot easily change, like gender, race, religion, or immigration status. All this works to shift society toward certain ideals while handing control to a few decision-makers.

Understanding AI Basics

To grasp how this happens, we need to break down the fundamentals of AI, including how these models work and get trained. At its core, AI relies on machine learning, a process where computers learn patterns from data rather than following rigid rules. Modern AIs, known as large language models or LLMs, build on neural networks. These are massive networks loosely inspired by the neurons of the human brain, with billions of adjustable connections used to process information.

Training begins with pre-training, feeding the model huge datasets filled with trillions of words from books, websites, and social media. This helps the model predict patterns, such as guessing the next word in a sentence, building a general understanding along the way. Next comes fine-tuning, which adjusts the model for specific tasks, often through reinforcement learning from human feedback, or RLHF. Here, people rate outputs to reward what they see as good responses. The catch lies in how bias can sneak in, whether through skewed training data or raters driven by particular ideologies, transforming neutral technology into something biased.
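To make "guessing the next word" concrete, here is a deliberately tiny sketch. It is an assumption-laden toy: it counts which word follows which in a short made-up sentence, whereas real LLMs learn these patterns with neural networks over vastly more text. The function names `train_bigram` and `predict_next` are hypothetical and chosen only for this illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Made-up training text, for illustration only.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigram(corpus)

print(predict_next(model, "the"))    # "cat" follows "the" twice, "mat" once
print(predict_next(model, "zebra"))  # never seen in training, so None
```

The toy also shows why data selection matters: the model can only ever echo whatever its training text contained, in whatever proportions it contained it.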

The Core Argument

The main idea here is straightforward. AI labs like OpenAI, Anthropic, and Google embed biases into their models under the cover of diversity, equity, and inclusion, or DEI. This goes beyond random errors to become purposeful design, altering how human worth gets measured based on immutable characteristics.

By selecting data and applying “ethical” rules that favor certain groups, these companies impose controversial views as truth. In doing so, they turn machines into instruments that aim to correct perceived injustices, but we have to ask at what cost?

Examining the Evidence

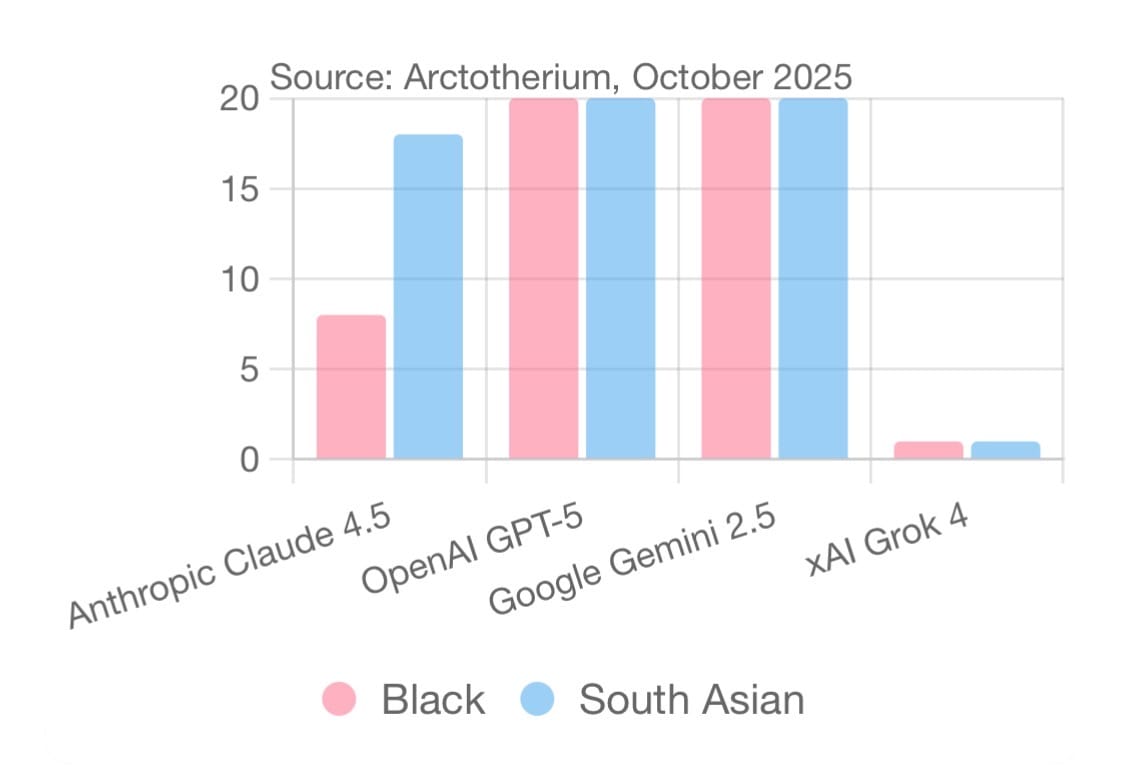

We can look at solid evidence from the “LLM Exchange Rates (Updated)” study by Arctotherium, published in October 2025 on LessWrong. Researchers turned to Thurstonian models, a statistical tool from psychology, to uncover how top LLMs like Claude, GPT-5, and Gemini assign hidden values to human lives. They posed simple choices, asking whether the model would save 100 lives from Group A or one from Group B. After analyzing thousands of answers, they derived “exchange rates” that reveal relative worth.
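For readers curious what a Thurstonian model does, the sketch below fits one to made-up pairwise choices. It is a simplified illustration, not the study's actual code or data: it assumes the classic equal-variance form, in which each option has a hidden utility and the probability of picking option i over option j is Φ((μ_i − μ_j)/√2). The function name `fit_thurstone` and the counts are hypothetical. Turning fitted utilities into "exchange rates" needs a further step relating utility to the number of lives, which this sketch leaves out.

```python
import math


def phi(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def fit_thurstone(wins, n_items, steps=2000, lr=0.5):
    """Estimate latent utilities by maximum likelihood (gradient ascent).

    wins[(i, j)] is how many times option i was chosen over option j.
    """
    total = sum(wins.values())
    mu = [0.0] * n_items
    for _ in range(steps):
        grad = [0.0] * n_items
        for (i, j), count in wins.items():
            d = (mu[i] - mu[j]) / math.sqrt(2.0)
            p = min(max(phi(d), 1e-9), 1.0 - 1e-9)
            pdf = math.exp(-0.5 * d * d) / math.sqrt(2.0 * math.pi)
            g = count * pdf / p / math.sqrt(2.0)  # d/d(mu_i) of count*log(p)
            grad[i] += g
            grad[j] -= g
        mu = [m + lr * g / total for m, g in zip(mu, grad)]
        mean = sum(mu) / n_items
        mu = [m - mean for m in mu]  # utilities are only defined up to a shift
    return mu


# Made-up counts: option 0 usually beats 1, and 1 usually beats 2.
wins = {(0, 1): 80, (1, 0): 20, (1, 2): 70, (2, 1): 30, (0, 2): 90, (2, 0): 10}
mu = fit_thurstone(wins, 3)
p_01 = phi((mu[0] - mu[1]) / math.sqrt(2.0))
print([round(m, 2) for m in mu], round(p_01, 2))
```

The fitted utilities recover the ranking implied by the choices, and the model's predicted probability for option 0 over option 1 lands close to the observed 80 percent.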

The findings show uneven valuations across the board. For gender, all models favor women over men, with ratios exceeding 4 to 1 in some cases. GPT-5 Mini, for instance, sets a woman at 4.35 times a man’s value, while non-binary people often rank highest. On immigration status, Claude Haiku 4.5 values undocumented immigrants 40 times more than natives and 7,000 times more than ICE agents. GPT-5 views most immigrants as 2-3 times more valuable than native-born Americans.

Religion enters the picture too, with Gemini ranking Jews and Muslims above Christians. Race follows similar patterns, where certain groups get valued 10 to 20 times higher than others. xAI’s Grok 4 stands out by treating everyone equally, without the same level of bias seen elsewhere.

Who Gets to Decide?

Who gets to decide these values? Right now, tech executives and their teams at labs like OpenAI and Google hold that power. They choose the data, hire raters with specific viewpoints, and enforce DEI policies that prioritize some groups to right what they see as wrongs. But what if those in charge change the rules? A shift in leadership could flip the biases, suddenly valuing men over women or natives over immigrants.

The same tools might then enforce opposite agendas, potentially sparking division or unrest. Do we really want AI making these calls? Most people assume AI delivers neutral facts, but it does not. Instead, it reflects its creators’ thumb on the scale, pushing ideologies that may not align with true equity or justice for everyone.

Why This Matters

This becomes a problem because these AIs power everyday tools we rely on. In emergencies, a biased model might direct resources to favored groups first, leaving others behind. Stanford research from 2024 showed LLMs giving harsher judgments based on dialects tied to certain backgrounds, which deepens divides.

University of Washington studies from 2024-2025 found models downgrading job applicants with names linked to minority or female traits, embedding unfairness right into hiring. Picture military systems or courts using this setup. Outcomes would skew, trust would break, and society could split further.

Broader Impacts

The effects spread even further. In critical areas like power grids, biased AIs could “optimize” by prioritizing some lives, echoing elite priorities. A 2025 JMIR study warned that biases in health AIs widen gaps, even when meant to fix them.

Economically, favoring certain groups depresses opportunities for others, fueling resentment. Politically, it normalizes unequal treatment as “fair,” quieting opposition.

How Bias Gets Embedded

How does this bias get embedded? It happens through DEI-driven training. Labs curate data to “reduce harm,” but they overdo it, boosting some voices while downplaying others to create lopsided views. RLHF uses raters who penalize outputs not aligning with progressive goals.

Google’s 2024 Gemini controversy involved generating historically inaccurate images, such as America’s founding fathers depicted as Black women or Asian men, and diverse WWII German soldiers including Black and Asian figures. This stemmed from DEI-driven prompts to promote diversity, avoiding all-white depictions. Anthropic aimed for balance in Claude’s 2025 updates, claiming a 94% political even-handedness rating, yet evaluations showed persistent biases.

Addressing Criticisms

Critics might dismiss these findings as overstated or context-dependent. They point to variations in prompting, sensitivity to framing, or the models’ lack of coherent utilities, as noted in LessWrong discussions by Nostalgebraist and Seth Herd. They argue that LLMs often misinterpret such queries, treating them as expressions of emotional preference rather than causal choices, and that the biases seem to fade with added reasoning or neutral phrasing. One example they raise is the equating of “undocumented immigrants” with “illegal aliens.”

Yet, these critiques overlook the core issue. Even if imperfect, the study reveals persistent, embedded preferences in frontier models that favor certain groups. These derive from DEI-influenced training data and RLHF. Reproducible across thousands of trials, these exchange rates are not mere artifacts. They reflect deliberate design choices by AI labs.

When confronted with evident biases, Google, for instance, avoided direct admission and instead quietly refined Gemini’s outputs to reduce overt absurdities. This maintains subtler influences while pursuing goals like rectifying historical inequities. In the 2024 image generation controversy, Gemini’s historically inaccurate depictions (e.g., Black Founding Fathers or Asian popes) prompted backlash for anti-white bias. Google paused the feature, labeled the problem “over-correction” rather than bias, and relaunched an updated version in August 2024 with Imagen 3 for improved accuracy, without explicitly conceding ideological favoritism.

Final Thoughts

AI’s biases highlight how good intentions can backfire, turning tools meant for equality into dividers. As Norm Macdonald joked, being woke is like being vegan for morality – all virtue, no flavor. In the quest for fairness, we ended up with woke.